LLM agents require a wide range of capabilities to execute tasks autonomously. They must be able to decompose complex user instructions, plan actions, interact with their environment using tools, call tools with correct arguments, reason about observations, and adjust their plan if needed.

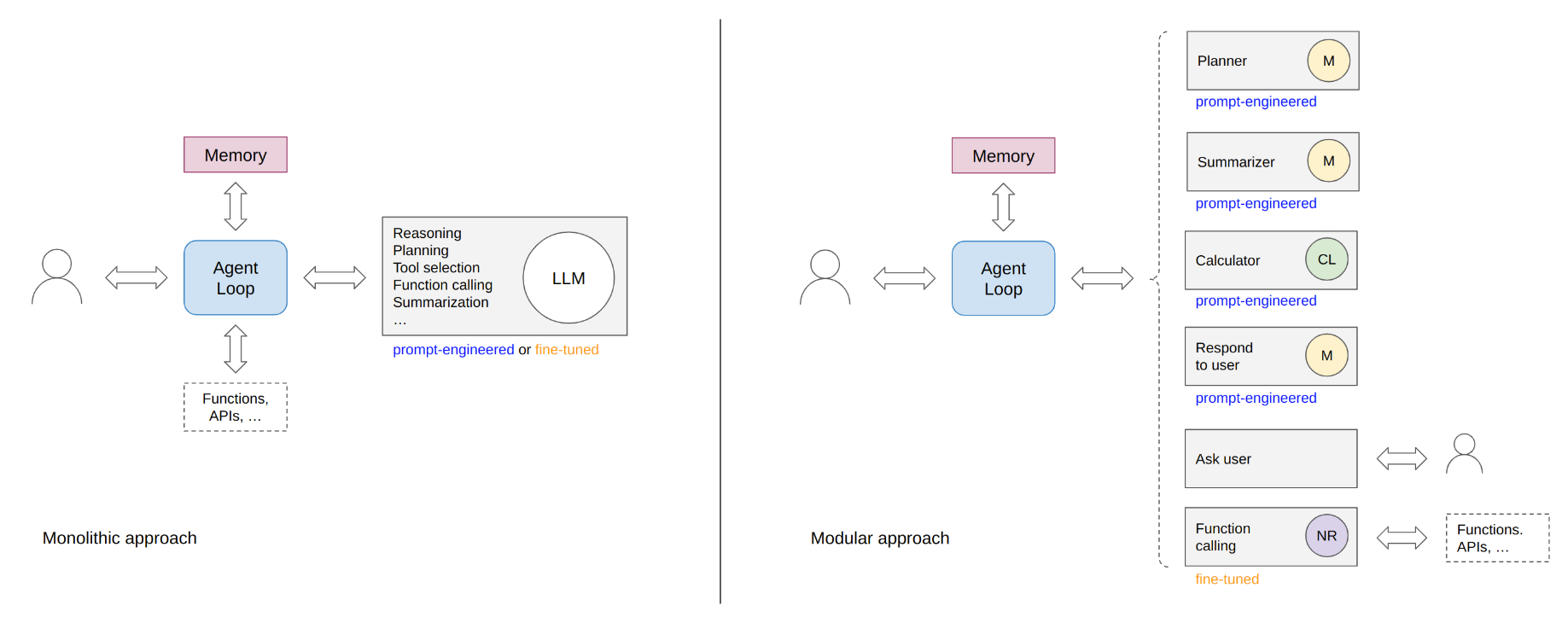

Instructing an LLM to behave like an agent is often done with comprehensive, sometimes monolithic prompts. Commercial LLMs like GPT-4 are more capable of understanding such complex prompts and have important features like function calling already built in. Open LLMs, especially smaller ones, still struggle to do so. An alternative is to fine-tune smaller open LLMs on agent trajectories created by larger models. Common to both approaches, however, is that a single monolithic expert still provides all the diverse capabilities of an agent (Figure 1, left).

Inspired by prompt chaining, I experimented with separating planning from function calling in ReAct-style agents. This separation of concerns makes the planner module of an agent responsible only for describing the task of the next step in an informal way and selecting an appropriate tool for that step, without having to deal with function calling details. The main idea is to reduce the responsibilities of a planner module as far as possible so that smaller LLMs can be better utilized for planning.

Translating the task description into a function call is the responsibility of the selected tool. It encapsulates all the tool-specific detailed knowledge required for extracting function call arguments from the task description and previous agent activities. Call argument extraction can either be done in a generic way with specialized function calling models, like NexusRaven-v2 for example, or in a very tool-specific way, for example when a task description must be translated into code for numeric calculations.

This decomposition of an agent into highly specialized modules is shown on the right side of Figure 1 and further described in the Modules section. The implementation used in this article makes heavy use of schema-guided generation. This ensures that the output generated by LLM-based modules follows an application-defined schema, which makes communication between modules more reliable.
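To make the idea of an application-defined schema concrete, here is a minimal sketch of what a planner output schema could look like. The field names (`thoughts`, `task`, `selected_tool`), the tool names and the helper `parse_planner_output` are assumptions made for illustration, not the schema used by the implementation. Note also that schema-guided generation constrains the model while it decodes; this sketch only checks a completion after the fact, which is enough to show what other modules can rely on.

```python
import json

# Hypothetical planner output schema; all field and tool names are illustrative.
PLANNER_SCHEMA = {
    "type": "object",
    "properties": {
        "thoughts": {"type": "string"},
        "task": {"type": "string"},
        "selected_tool": {
            "type": "string",
            "enum": ["search_internet", "calculate", "respond_to_user"],
        },
    },
    "required": ["thoughts", "task", "selected_tool"],
}


def parse_planner_output(completion: str, schema: dict = PLANNER_SCHEMA) -> dict:
    """Parse a JSON completion and check it against the simplified schema."""
    data = json.loads(completion)
    for name in schema["required"]:
        if name not in data:
            raise ValueError(f"missing field: {name}")
    for name, spec in schema["properties"].items():
        if name not in data:
            continue
        if not isinstance(data[name], str):
            raise ValueError(f"field {name} must be a string")
        if "enum" in spec and data[name] not in spec["enum"]:
            raise ValueError(f"field {name} has unsupported value: {data[name]}")
    return data
```

Because every module receives a dictionary with known keys and a tool name from a closed set, the agent loop does not need to parse free-form text.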

Figure 1. Monolithic approach (left) vs modular approach, as used in this article (right). M: Mistral-7B-Instruct-v0.2, CL: CodeLlama-7B-instruct, NR: NexusRaven-V2-13B.

Modules

At the core of the system is a ReAct-style agent loop that uses a planning module to plan actions. An action is defined by a selected tool and a task description. Executing the selected tool results in an observation. The agent uses short-term memory for recording task-observation pairs (scratchpad) and conversational memory for recording interactions with the user.
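The loop described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the implementation from the article: `Action`, `Scratchpad`, `run_agent` and the tool names are hypothetical, the planner is passed in as a plain callable, and conversational memory is left out.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Action:
    tool: str  # name of the tool selected by the planner
    task: str  # informal description of the next step


@dataclass
class Scratchpad:
    # Short-term memory: (task, observation) pairs for the current request.
    entries: List[Tuple[str, str]] = field(default_factory=list)


Planner = Callable[[str, Scratchpad], Action]
ToolFn = Callable[[str, str, Scratchpad], str]


def run_agent(request: str, plan: Planner, tools: Dict[str, ToolFn],
              final_tool: str = "respond_to_user", max_steps: int = 10) -> str:
    """Plan, execute the selected tool, record the observation, repeat."""
    scratchpad = Scratchpad()
    for _ in range(max_steps):
        action = plan(request, scratchpad)
        observation = tools[action.tool](request, action.task, scratchpad)
        if action.tool == final_tool:
            return observation  # the final tool's output is the answer
        scratchpad.entries.append((action.task, observation))
    raise RuntimeError("no final answer within max_steps")


# Usage with a scripted planner and stub tools instead of LLM-backed modules.
def scripted_plan(request: str, scratchpad: Scratchpad) -> Action:
    if not scratchpad.entries:
        return Action(tool="calculate", task="Calculate 155 - 55.")
    return Action(tool="respond_to_user", task="Answer the request.")


stub_tools = {
    "calculate": lambda request, task, pad: "100",
    "respond_to_user": lambda request, task, pad: f"The answer is {pad.entries[-1][1]}.",
}

print(run_agent("What is 155 - 55?", scripted_plan, stub_tools))  # The answer is 100.
```

The `max_steps` guard is a design choice of this sketch: a planner that never selects the final tool would otherwise loop forever.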

Planner

The planner reasons about the user request, summarizes relevant information from previous task-observation pairs, thinks about the next useful steps (CoT), generates a task description for the very next step and selects an appropriate tool based on a short, high-level tool description (sample prompt and completion). This information is then returned to the agent loop, which executes the selected tool. The planner uses a zero-shot prompted Mistral-7B-Instruct-v0.2 model.

Summarizer

Observations, i.e. tool execution results, can vary significantly in size and relevance. For example, a calculator may output a single number whereas a search engine may return large amounts of text, most of it irrelevant to the current task. A summarizer uses that observation to formulate a short, task-specific answer (sample prompt and completion). This makes it much easier for the planner to reason over past task-observation pairs. The summarizer uses a zero-shot prompted Mistral-7B-Instruct-v0.2 model.
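As a rough illustration of the summarizer's job, the following sketch builds a task-specific prompt from a task and a raw observation and passes it to a model. The prompt wording, the `max_chars` clipping and both function names are assumptions for this example; the model is represented by any callable that maps a prompt string to a completion string.

```python
from typing import Callable


def build_summary_prompt(task: str, observation: str, max_chars: int = 4000) -> str:
    """Build a hypothetical summarization prompt (wording is illustrative)."""
    clipped = observation[:max_chars]  # keep very long tool output within budget
    return (
        "Answer the task using only the observation below. Be brief.\n\n"
        f"Task: {task}\n\n"
        f"Observation:\n{clipped}\n\n"
        "Answer:"
    )


def summarize(model: Callable[[str], str], task: str, observation: str) -> str:
    """Turn a raw observation into a short, task-specific answer."""
    return model(build_summary_prompt(task, observation)).strip()
```

With a stub such as `lambda prompt: " 25 years old. "` in place of the model, `summarize` returns the stripped completion, which is what would be recorded in the scratchpad.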

Tools

Most modules of the agent are tools and the system can be extended with further tools by implementing a common tool interface. During execution, a tool gets access to the user request, the current task description and the agent’s scratchpad. It is up to the tool implementation to make use of all or only a subset of the provided information.
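A common tool interface along these lines might look as follows. The class and method names are hypothetical and the library's actual interface may differ; the sketch only shows the three inputs named above (user request, task description, scratchpad) and a tool that ignores two of them, in the spirit of the ask user tool described further below.

```python
from abc import ABC, abstractmethod
from typing import Callable, List, Tuple


class Tool(ABC):
    """Hypothetical common interface; names are assumptions for this sketch."""

    name: str
    description: str  # short, high-level text shown to the planner

    @abstractmethod
    def run(self, request: str, task: str, scratchpad: List[Tuple[str, str]]) -> str:
        """Execute the task and return an observation."""


class AskUserTool(Tool):
    name = "ask_user"
    description = "Ask the user for missing information."

    def __init__(self, input_fn: Callable[[str], str] = input):
        # input_fn is injectable so the tool can be exercised without a terminal.
        self.input_fn = input_fn

    def run(self, request: str, task: str, scratchpad: List[Tuple[str, str]]) -> str:
        # No LLM involved: the task text is the question, the reply is the observation.
        return self.input_fn(f"{task}: ")
```

New tools would subclass `Tool` and decide for themselves which of the three inputs they use.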

Function call

The function call tool wraps a user-defined function into a tool interface so that it can be selected by the planner. It binds information from the task description and previous observations to function parameters. The default implementation uses NexusRaven-V2-13B for that purpose, an LLM fine-tuned for function calling from natural language instructions (sample prompt and completion). An alternative implementation uses a zero-shot prompted CodeLlama-7B-instruct model to generate function call arguments from instructions.
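Once a model has produced a call expression, it still has to be bound to the wrapped Python function. The sketch below shows one cautious way to do that with the standard `ast` module: only a plain call with literal arguments is accepted, and nothing is evaluated with `eval`. The function `invoke_call` and the registry dictionary are assumptions for illustration, not the article's implementation.

```python
import ast
from typing import Any, Callable, Dict


def invoke_call(call: str, functions: Dict[str, Callable[..., Any]]) -> Any:
    """Parse a call such as "f(x='a', n=2)" and invoke the registered function."""
    node = ast.parse(call.strip(), mode="eval").body
    if not isinstance(node, ast.Call) or not isinstance(node.func, ast.Name):
        raise ValueError("expected a simple function call")
    if node.func.id not in functions:
        raise ValueError(f"unknown function: {node.func.id}")
    # literal_eval accepts only literals, so names and nested calls are rejected.
    args = [ast.literal_eval(arg) for arg in node.args]
    kwargs = {}
    for keyword in node.keywords:
        if keyword.arg is None:  # reject **kwargs unpacking
            raise ValueError("keyword unpacking is not supported")
        kwargs[keyword.arg] = ast.literal_eval(keyword.value)
    return functions[node.func.id](*args, **kwargs)
```

Nested or parallel calls, which NexusRaven-V2 can also generate, would need a recursive variant of this function.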

Calculate

The calculate tool generates and executes Python code from a mathematical task description and previous (numerical) observations. The current implementation supports calculations that result in a single number (sample prompt and completion). Python code running this calculation is generated with a CodeLlama-7B-instruct model. Code execution is not sandboxed; use this component at your own risk.
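The execution step can be sketched as follows, assuming that the generated code assigns its outcome to a variable named `result`, as in the examples later in this article. The function name `run_calculation` is hypothetical. Like the component it illustrates, this sketch is not a sandbox: `exec` runs whatever code it is given.

```python
import math


def run_calculation(code: str) -> float:
    """Execute generated code and return the number it assigns to `result`."""
    namespace = {"math": math}  # make the math module available to generated code
    exec(code, namespace)  # NOT sandboxed: only run code you are willing to trust
    result = namespace.get("result")
    if isinstance(result, bool) or not isinstance(result, (int, float)):
        raise ValueError("generated code must assign a number to `result`")
    return float(result)


print(round(run_calculation("result = 25 ** 0.7"), 5))
```

Restricting the outcome to a single number mirrors the limitation stated above; supporting other result types would require a richer contract between the code model and the tool.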

Ask user

This tool is used when the planner needs further input from the user. It doesn’t use an LLM and simply returns the user’s answer to the agent (code).

Respond to user

A tool that generates a final answer to the original request using all previous task-observation pairs (code, sample prompt and completion). This tool uses a zero-shot prompted Mistral-7B-Instruct-v0.2 model for generating the answer.

Examples

All models used by the modular agent are running on a llama.cpp server. Instructions for serving these LLMs are available here.

from langchain_experimental.chat_models.llm_wrapper import Llama2Chat

from gba.chat import MistralInstruct
from gba.llm import LlamaCppClient

# Proxy for 8-bit quantized Mistral-7B-Instruct-v0.2
mistral_instruct = MistralInstruct(
    llm=LlamaCppClient(url="http://localhost:8081/completion", temperature=-1),
)

# Proxy for 4-bit quantized CodeLlama-7B-Instruct
code_llama = Llama2Chat(
    llm=LlamaCppClient(url="http://localhost:8088/completion", temperature=-1),
)

# Proxy for 8-bit quantized NexusRaven-V2-13B
nexus_raven = LlamaCppClient(url="http://localhost:8089/completion", temperature=-1)

Custom functions used in this example are create_event for adding events to a calendar, search_internet for searching documents matching a query, and search_images for searching images matching a query. To keep this example simple and to avoid dependencies on external APIs, search_internet searches for documents in a local document store, while search_images and create_event are mocked. You can replace them with other implementations or add new functions as you like.
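For orientation, a mocked create_event could look like the sketch below. The signature, type hints and docstring are assumptions inferred from the call and observation shown in the single tool use example; the actual function in example_funcs may differ. A descriptive signature and docstring matter here because function calling models such as NexusRaven-V2 are typically prompted with exactly this information.

```python
from typing import Optional


def create_event(title: str, date: str, time: Optional[str] = None) -> str:
    """Add an event to the calendar.

    Args:
        title: Title of the event.
        date: Date of the event, as free text.
        time: Optional start time, as free text.
    """
    # Mocked: nothing is stored, only a confirmation message is returned.
    return f"Event {title} successfully added to calendar, date={date}, time={time}"
```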

from gba.agent import Agent
from gba.search import SearchEngine
from gba.store import DocumentStore
from gba.summary import ResultSummarizer
from gba.tools import *

from example_docs import DOCUMENTS
from example_funcs import create_event

store = DocumentStore(path=".chroma")
engine = SearchEngine(store=store)

if store.count() == 0:
    for i, document in enumerate(DOCUMENTS):
        store.add(identifier=str(i), document=document)

summarizer = ResultSummarizer(model=mistral_instruct)

tools = [
    AskTool(),
    CalculateTool(model=code_llama, summarizer=summarizer),
    FunctionCallTool(model=nexus_raven, fn=create_event),
    FunctionCallTool(model=nexus_raven, fn=engine.search_images),
    FunctionCallTool(model=nexus_raven, fn=engine.search_internet, summarizer=summarizer),
    RespondTool(model=mistral_instruct),
]

agent = Agent(model=mistral_instruct, tools=tools)

Multiple tool use

agent.run("Who is Leonardo DiCaprio's current girlfriend and "
          "what is her age raised to the power of 0.7?")

Task: Search the internet for information about Leonardo DiCaprio's current girlfriend.

Call: search_internet(query='Leonardo DiCaprio\'s current girlfriend')

Observation: Leonardo DiCaprio's current girlfriend is Italian model Vittoria Ceretti.

Task: Search the internet for information about Vittoria Ceretti's age.

Call: search_internet(query='Vittoria Ceretti')

Observation: Vittoria Ceretti is 25 years old.

Task: Calculate the value of 25 raised to the power of 0.7.

```python
result = 25 ** 0.7
```

Observation: The value of 25 raised to the power of 0.7 is approximately 9.51827.

'The current girlfriend of Leonardo DiCaprio is Vittoria Ceretti, and the value of her age (which is 25) raised to the power of 0.7 is approximately 9.51827.'

agent.run("How many years did octopuses appear earlier "
          "on Earth, compared to elephants?")

Task: Search the internet for the first appearance dates of octopuses and elephants on Earth.

Call: search_internet(query='first appearance dates of octopuses and elephants on Earth')

Observation: Octopuses first appeared around 155 million years ago during the Cretaceous period.

Task: Search the internet for the first appearance date of elephants on Earth.

Call: search_internet(query='first appearance date of elephants on Earth')

Observation: Elephants first appeared on Earth around 55 million years ago.

Task: Calculate the difference in years between the first appearance of octopuses (around 155 million years ago) and the first appearance of elephants (around 55 million years ago).

```python
result = 155 - 55
```

Observation: The difference in years between the first appearance of octopuses (around 155 million years ago) and the first appearance of elephants (around 55 million years ago) is approximately 100 million years.

'Octopuses first appeared around 100 million years earlier than elephants.'

Single tool use

More precisely, the agent uses a single tool in addition to the respond to user tool.

agent.run("Add Martin's birthday party, starting Dec. 17th 2023 8pm, "
          "to my calendar and respond with a one-line invitation")

Task: Creating Martin's birthday party event on Dec. 17th, 2023 at 8pm and generating a one-line invitation.

Call: create_event(title='Martin\'s birthday party', date='Dec. 17th, 2023', time='8pm')

Observation: Event Martin's birthday party successfully added to calendar, date=Dec. 17th, 2023, time=8pm

"You're invited to Martin's birthday party on Dec. 17th, 2023 at 8pm."

Respond directly

agent.run("Tell me a joke")

"Why don't scientists trust atoms? Because they make up everything!"

Request user feedback

The agent asks the user for additional input required to complete the request.

agent.run("Tell me a joke about my best friend")

Could you please provide some details about your best friend, such as their name or any quirks they have?: Michael

Observation: Michael

Could you please tell me any funny or unique traits that your best friend Michael has?: works at home

Observation: works at home

Task: Searching the internet for a joke about someone who works from home.

Call: search_internet(query='joke about someone who works from home')

Observation: I'm sorry, I couldn't find a joke about someone who works from home in the provided text.

Could you please provide any additional details about your best friend Michael that might help me create a joke?: eats chickens

Observation: eats chickens

'Why did Michael the chicken farmer laugh while working from home? Because he was raising a good cluck of business!'

Conversational tool use

The agent stores conversational state in a memory module separate from its scratchpad. Conversational memory must be explicitly enabled with conversational=True.

conversational_agent = Agent(model=mistral_instruct, tools=tools, conversational=True)

conversational_agent.run("I want an image of a dog")

Task: Searching for an image of a dog...

Call: search_images(query='dog')

Observation: [dog_1.jpg](https://example.com/dog_1.jpg)

'Here is the image you requested: [dog_1.jpg](https://example.com/dog_1.jpg)'

conversational_agent.run("It should be brown")

Task: Searching for an image of a brown dog.

Call: search_images(query='brown dog')

Observation: [brown_dog_1.jpg](https://example.com/brown_dog_1.jpg)

'Here is an image of a brown dog: [brown_dog_1.jpg](https://example.com/brown_dog_1.jpg)'

conversational_agent.run("Find an image with two of them")

Task: Search for an image of two brown dogs.

Call: search_images(query='two brown dogs')

Observation: [two_brown_dogs_1.jpg](https://example.com/two_brown_dogs_1.jpg)

'Here is an image of two brown dogs: [two_brown_dogs_1.jpg](https://example.com/two_brown_dogs_1.jpg)'

Improvements

The current implementation is very simple and minimalistic. Potential improvements, in addition to planner fine-tuning, include but are not limited to:

- Better planning with tree search, as in DFSDT or LATS, for example.

- Self-improvement via self-reflection or action learning, for example.

- Function or API selection from a large database instead of enumerating them in the planner prompt.

- Better code LLM for the calculator tool to support more complex instructions and result types.

- Leverage nested and parallel function calls with NexusRaven-V2.

- …

Improvements can be implemented via prompt engineering and/or fine-tuning. A later version may also implement the agent modules as LangChain-compatible chains and tools, and the agent itself as a LangChain agent, but in the current experimentation phase I prefer the flexibility of a lightweight custom implementation.

Conclusion

Separating planning from function calling reduces the responsibilities of a planner module and supports the use of smaller LLMs for planner implementation. This article demonstrated how to elicit multi-step planning behavior from a general-purpose instruction-tuned LLM like Mistral-7B-Instruct-v0.2, without further fine-tuning. In a follow-up article, I’ll show how a planner module can be fine-tuned on synthetic data.